Paperspace setup

Most people don’t have a GPU suited for Deep Learning in the machine they work on, and in fact you don’t need one. Setting up a remote GPU server is quite easy nowadays, and in this blog post I will explain how to do so with Paperspace Gradient.

I started using Paperspace on the recommendation of Jeremy Howard in his Live Coding Videos. If you haven’t seen these lectures, I highly recommend them. They are a great resource on many topics related to getting started with Deep Learning, and Jeremy shares a lot of productivity hacks and practical tips for getting a good setup.

However, the Paperspace setup instructions in the videos are a bit outdated, which can lead to confusion when following along. Also, after recording the videos, Jeremy created some nice scripts that simplify the setup. This blog post will hopefully help others navigate this and quickly set up a remote GPU server. I would advise anybody who wants to try Paperspace to first watch Jeremy’s videos to get a general idea of how it works, and then follow these steps to get set up quickly.

Once you have signed up for Paperspace, go to their Gradient service and create a new project. Paperspace has a free tier, as well as a Pro plan ($8/month) and a Growth plan ($39/month). I personally signed up for the Pro plan, which offers very good value for money: you get 15GB of persistent storage and free Mid instance types. If available, I use the A4000, which is the fastest of these and comes with 16GB of GPU memory.

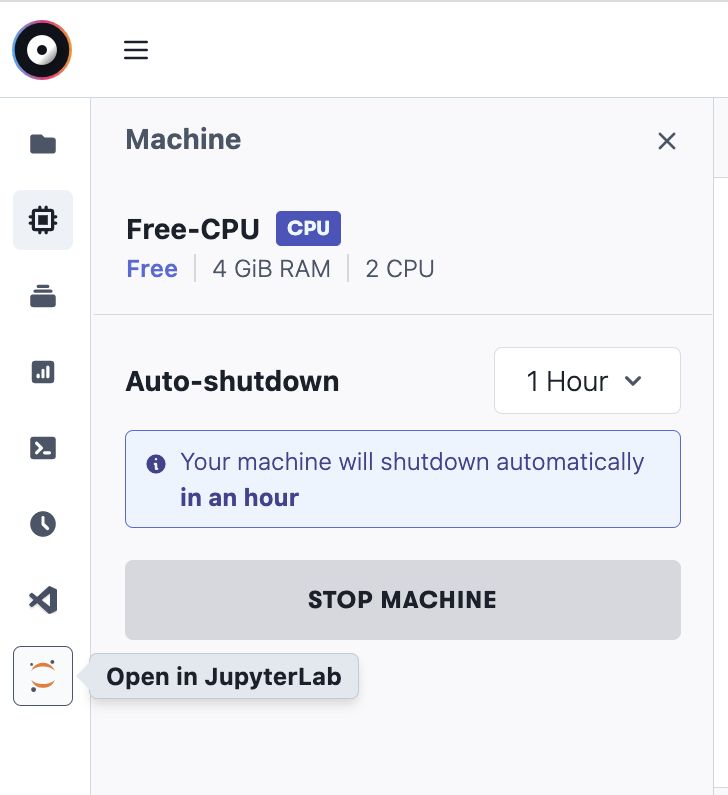

Paperspace has both free and paid servers. The free ones have a 6-hour usage limit, after which they are automatically shut down; the paid ones you can use as long as you like. Sometimes the free servers are out of capacity, which is a bit annoying, but in my experience I can get what I need most of the time.

With the Pro plan you can create up to 3 servers, or “Notebooks” as Paperspace calls them (throughout this blog post I’ll refer to them as Notebook Servers). So let’s create one:

- Select the “Fast.AI” runtime

- Select a machine, for example the Free-A4000. You can always change this when you restart your machine

- Remove the Workspace URL under the advanced options to create a totally empty server

- Navigate to JupyterLab

First look at our Notebook Server

Next, let’s open a terminal and get familiar with our server.

Terminal

```
> which python
/usr/local/bin/python
> python --version
Python 3.9.13
```

And let’s also check the PATH variable:

Terminal

```
> echo $PATH
/usr/local/nvidia/bin:/usr/local/cuda/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/root/mambaforge/bin
```

So the python command points to the system Python installation in /usr/local/bin. However, the PATH variable also contains an entry at the end that mentions mambaforge. The shell searches the PATH from left to right, so /usr/local/bin takes precedence over /root/mambaforge/bin.
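The following small sketch lets you see that lookup order for yourself. A helper called `print_path_entries` (a name made up for this example) prints each PATH entry on its own line, in search order. Bash’s built-in `type -a` then lists every `python` the shell can find, with the one that actually runs listed first.

```bash
#!/usr/bin/env bash
# Print the entries of a PATH-style string one per line, numbered in the
# order the shell searches them.
print_path_entries() {
  local IFS=':'      # split the argument on colons only
  local i=1 dir
  for dir in $1; do  # intentionally unquoted so the IFS splitting applies
    printf '%d %s\n' "$i" "$dir"
    i=$((i + 1))
  done
}

print_path_entries "$PATH"

# Every `python` the shell can see; the first line is the one that runs.
type -a python 2>/dev/null || echo "no python found on PATH"
```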

And indeed, mamba is available too:

Terminal

```
> mamba list | grep python
ipython 8.5.0 pyh41d4057_1 conda-forge
ipython_genutils 0.2.0 py_1 conda-forge
python 3.10.6 h582c2e5_0_cpython conda-forge
python-dateutil 2.8.2 pyhd8ed1ab_0 conda-forge
python-fastjsonschema 2.16.2 pyhd8ed1ab_0 conda-forge
python_abi 3.10 2_cp310 conda-forge
```

So we have both a Mamba-based Python 3.10.6 and a system installation of Python 3.9.13.
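To compare the two installations directly, you can call each interpreter by its full path. The sketch below wraps that in a hypothetical `python_version_of` helper. The first path is the one `which python` returned above. The Mamba path is an assumption inferred from the /root/mambaforge/bin entry on the PATH, so adjust it if your image differs.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the version of the interpreter at a given path,
# or report that nothing executable lives there.
python_version_of() {
  if [ -x "$1" ]; then
    printf '%s: %s\n' "$1" "$("$1" --version 2>&1)"
  else
    printf '%s: not found\n' "$1"
  fi
}

python_version_of /usr/local/bin/python        # system Python
python_version_of /root/mambaforge/bin/python  # Mamba's Python (assumed path)
```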

Let’s open a Jupyter Notebook and see which Python version is running:

Untitled.ipynb

```python
import sys
sys.version
```

This returns '3.9.13 (main, May 23 2022, 22:01:06) \n[GCC 9.4.0]', so Jupyter is running the system Python installation.

In the videos, Jeremy mentions that we should never use the system Python and should always create a Mamba installation instead. However, since we are working on a virtual machine that is only used for running Python, this shouldn’t be a problem. Just be aware that we are using the system Python, which is completely separate from the Mamba setup.

Since Jupyter runs the system Python, we can use pip to inspect all the packages that are installed for it:

Terminal

```
> pip list
...
fastai 2.7.10
fastapi 0.92.0
fastbook 0.0.28
fastcore 1.5.27
fastdownload 0.0.7
fastjsonschema 2.15.3
fastprogress 1.0.3
...
torch 1.12.0+cu116
torchaudio 0.12.0+cu116
torchvision 0.13.0+cu116
...
```

Persisted Storage at Paperspace

In general, nothing is persisted on Paperspace: anything we store during a session will be gone when we restart our Notebook Server. However, Paperspace comes with two special folders that are persisted. It’s important to understand how these folders work, since we obviously need to persist our work. Beyond that, we also need to persist the configuration files for services like GitHub, Kaggle and HuggingFace, and potentially for any other tools or services we are using.

The persisted folders are called /storage and /notebooks. Anything in our /storage is shared among all the Notebook Servers we are running, whereas anything that is stored in the /notebooks folder is only persisted on that specific Notebook Server.
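The rule above is simple enough to write down as a quick check. The following hypothetical `persistence_of` helper classifies a path by its location. It resolves symlinks first with `readlink -f` (GNU coreutils), which becomes relevant once the setup below starts symlinking folders into /storage.

```bash
#!/usr/bin/env bash
# Hypothetical helper: say whether a path survives a restart, based on the
# /storage and /notebooks rules. Symlinks are resolved first, so a link
# into /storage counts as persisted.
persistence_of() {
  local target
  # readlink -f fails when parent folders don't exist; fall back to the input.
  target=$(readlink -f -- "$1" 2>/dev/null || printf '%s' "$1")
  case "$target" in
    /storage|/storage/*)     echo "persisted, shared by all Notebook Servers" ;;
    /notebooks|/notebooks/*) echo "persisted, this Notebook Server only" ;;
    *)                       echo "not persisted" ;;
  esac
}

persistence_of "$HOME"
```

For example, once ~/.local has become a symlink into /storage/cfg, `persistence_of ~/.local` reports it as persisted and shared.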

Setup

In the first few videos, Jeremy shows a lot of tricks for installing new packages and setting up things like Git and GitHub. After recording these videos, he created a GitHub repo that greatly simplifies this setup and makes most of the steps from the videos unnecessary. So let’s use that:

Terminal

```
> git clone https://github.com/fastai/paperspace-setup.git
> cd paperspace-setup
> ./setup.sh
```

To understand what this does, let’s have a look at setup.sh:

setup.sh

```bash
#!/usr/bin/env bash
mkdir /storage/cfg
cp pre-run.sh /storage/
cp .bash.local /storage/
echo install complete. please start a new instance
```

First, it creates a new directory called cfg inside our /storage folder. As we will see, this is where we will store all our configuration files and folders.

Next, the script copies two files to our /storage folder. Let’s have a closer look at them.

pre-run.sh

During startup of a Notebook Server (upon creation or restart), Paperspace automatically executes the script it finds at /storage/pre-run.sh. This is really neat, since we can create a script at this location to automate our setup!

The full script lives in the paperspace-setup repository cloned above. Let’s have a closer look at its first part, which loops over a list of folders.

For each folder name in the list (.local, .ssh, ...), the script creates a matching directory inside /storage/cfg, but only if that directory doesn’t already exist. It then removes any existing copy of the folder from the home directory (~/) and replaces it with a symlink to the directory in /storage/cfg.
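The snippet itself is not reproduced here, so the following is a rough sketch of the logic just described. It runs in a throwaway sandbox, where `STORAGE` and `FAKE_HOME` are hypothetical stand-ins for /storage and ~, so your real home directory is left alone. The folder list is the one named in this post and its order is an assumption; the actual script may differ.

```bash
#!/usr/bin/env bash
# Sketch of pre-run.sh's directory loop, run in a throwaway sandbox.
# STORAGE and FAKE_HOME are hypothetical stand-ins for /storage and ~.
STORAGE=$(mktemp -d) || exit 1
FAKE_HOME=$(mktemp -d) || exit 1
mkdir -p "$STORAGE/cfg"    # setup.sh creates this once

# Folder list as named in this post; the real script's list may differ.
for p in .local .ssh .conda .kaggle .fastai .config .ipython .jupyter
do
  # Create the persistent copy only if it does not exist yet.
  if [ ! -e "$STORAGE/cfg/$p" ]; then
    mkdir "$STORAGE/cfg/$p"
  fi
  # Remove whatever lives in home and point it at storage instead.
  rm -rf "$FAKE_HOME/$p"
  ln -s "$STORAGE/cfg/$p" "$FAKE_HOME/"
done

ls -la "$FAKE_HOME"   # each folder now shows up as a symlink (->)
```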

This means that:

- When we store something in any of these symlinked folders (e.g. ~/.local), it’s actually written to the associated storage folder (e.g. /storage/cfg/.local) because of the symlink.

- Whenever we restart our Notebook Server, everything that was previously persisted (e.g. in /storage/cfg/.local) is made available again in the home directory (e.g. ~/.local).

This is very nice because, as it turns out, many tools keep their configuration files in the home directory. By persisting these folders, those tools keep working across restarts of our Notebook Servers.
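The following sketch makes the restart argument concrete by simulating two boots of a Notebook Server in a sandbox. It again uses hypothetical `STORAGE` and `FAKE_HOME` stand-ins. A file written through the ~/.local symlink survives, even though the home directory itself is wiped in between.

```bash
#!/usr/bin/env bash
# Simulate two boots of a Notebook Server. STORAGE and FAKE_HOME are
# hypothetical stand-ins for /storage and ~.
STORAGE=$(mktemp -d) || exit 1
FAKE_HOME=$(mktemp -d) || exit 1
mkdir -p "$STORAGE/cfg"

link_cfg() {  # same pattern as pre-run.sh, for a single folder
  [ -e "$STORAGE/cfg/$1" ] || mkdir "$STORAGE/cfg/$1"
  rm -rf "$FAKE_HOME/$1"
  ln -s "$STORAGE/cfg/$1" "$FAKE_HOME/"
}

# First boot: link ~/.local and write something into it.
link_cfg .local
echo "hello" > "$FAKE_HOME/.local/note.txt"

# Restart: the home directory is wiped (rm -rf removes the link, not its
# target), while storage is untouched.
rm -rf "$FAKE_HOME" && mkdir "$FAKE_HOME"

# Second boot: the pre-run logic runs again and the file is back.
link_cfg .local
cat "$FAKE_HOME/.local/note.txt"
```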

Let’s take a closer look at the folders we are persisting:

.local

We saw before that the FastAI runtime comes with a number of Python packages pre-installed. To install additional packages, we could run pip install <package>. However, pip then installs them into /usr/local/lib, so they are not persisted. To make sure our packages are persisted, we can instead install with pip install --user <package>. The --user flag tells pip to install the package only for the current user, which puts it in the ~/.local directory. So by persisting this folder, we make sure that our custom-installed Python packages are persisted too. Awesome!
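To check where `pip install --user` actually puts things, you can ask Python’s built-in `site` module, using its standard `--user-base` and `--user-site` options. The example uses `python3`; on the server you may prefer `python`, which runs the system interpreter shown earlier.

```bash
#!/usr/bin/env bash
# Ask Python's site module where per-user installs go.
# --user-base: the prefix used by `pip install --user` (~/.local by default on Linux)
# --user-site: the site-packages folder inside that prefix
user_base=$(python3 -m site --user-base)
user_site=$(python3 -m site --user-site)

echo "user base:     $user_base"
echo "user packages: $user_site"
echo "user scripts:  $user_base/bin"   # console scripts from --user installs
```

Both paths live under ~/.local, which is exactly why persisting that one folder is enough.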

.ssh

To authenticate with GitHub without passwords, we use SSH keys. To create a key pair, we run ssh-keygen, which writes the private key (id_rsa) and the public key (id_rsa.pub) to the ~/.ssh folder. Once we upload the public key to GitHub, we can authenticate with GitHub, and because this folder is persisted, that keeps working after a restart!
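Here is a sandboxed sketch of the key generation. It writes the pair into a temporary folder instead of ~/.ssh so that nothing existing gets overwritten. It passes `-t rsa` explicitly so the file names match the ones above, and it uses an empty passphrase (`-N ""`) purely for convenience. On the server you would simply run `ssh-keygen` and accept the defaults.

```bash
#!/usr/bin/env bash
# Generate an RSA key pair into a temporary folder (not ~/.ssh).
key_dir=$(mktemp -d) || exit 1
ssh-keygen -t rsa -b 4096 -N "" -f "$key_dir/id_rsa" -q

# The public half is what you paste into GitHub
# (Settings > SSH and GPG keys).
cat "$key_dir/id_rsa.pub"

# With the real key in ~/.ssh, `ssh -T git@github.com` checks that
# GitHub accepts it.
```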

By now you probably get the idea: each of these folders holds a certain configuration we want to persist:

- .conda: contains conda/mamba-installed packages

- .kaggle: contains a kaggle.json authentication file

- .fastai: contains downloaded datasets and some other configuration

- .config, .ipython and .jupyter: contain config files for various pieces of software, such as matplotlib, IPython and Jupyter
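For Kaggle, the API client reads its token from ~/.kaggle/kaggle.json and warns if that file is readable by other users. The usual steps are therefore to move the downloaded file there and restrict its permissions. The sketch below does this in a sandbox with a dummy token; `FAKE_HOME` is a hypothetical stand-in for ~.

```bash
#!/usr/bin/env bash
# FAKE_HOME stands in for ~; the token below is a dummy, not a real key.
FAKE_HOME=$(mktemp -d) || exit 1
printf '{"username":"me","key":"dummy"}\n' > "$FAKE_HOME/kaggle.json"

mkdir -p "$FAKE_HOME/.kaggle"
mv "$FAKE_HOME/kaggle.json" "$FAKE_HOME/.kaggle/"
chmod 600 "$FAKE_HOME/.kaggle/kaggle.json"   # readable by the owner only

ls -l "$FAKE_HOME/.kaggle"
```

On the server, ~/.kaggle is a symlink into /storage/cfg, so the token only needs to be put in place once.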

I personally also added .huggingface to this list to make sure my HuggingFace credentials are persisted too, and submitted a PR with this change back to the main repo.

In the second part of the script we do exactly the same thing, but for a number of files instead of directories.

pre-run.sh (snippet)

```bash
for p in .git-credentials .gitconfig .bash_history
do
    if [ ! -e /storage/cfg/$p ]; then
        touch /storage/cfg/$p
    fi
    rm -rf ~/$p
    ln -s /storage/cfg/$p ~/
done
```

Now that we understand pre-run.sh, let’s have a look at the second file we store in our /storage folder:

.bash.local

.bash.local

```bash
#!/usr/bin/env bash
alias mambai='mamba install -p ~/.conda '
alias pipi='pip install --user '
export PATH=~/.local/bin:~/.conda/bin/:$PATH
```

Paperspace runs this script whenever we open a terminal. As you can see, it defines two aliases for persistently installing things with either mamba (mambai) or pip (pipi).

Binaries installed this way end up in ~/.local/bin (via pip) or ~/.conda/bin/ (via mamba). We need to add these paths to the PATH variable so that we can call these binaries from the command line.
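The following small sandbox demo shows why the PATH line matters. A program placed in a hypothetical `FAKE_HOME/.local/bin` cannot be called by name until that folder is added to PATH, which is what .bash.local does for the real ~/.local/bin.

```bash
#!/usr/bin/env bash
# FAKE_HOME is a hypothetical stand-in for ~.
FAKE_HOME=$(mktemp -d) || exit 1
mkdir -p "$FAKE_HOME/.local/bin"

# A tiny executable standing in for something installed with pipi.
printf '#!/bin/sh\necho "hello from .local/bin"\n' > "$FAKE_HOME/.local/bin/hello-demo"
chmod +x "$FAKE_HOME/.local/bin/hello-demo"

command -v hello-demo || echo "hello-demo: not on PATH yet"

# Same kind of line as in .bash.local, pointed at the sandbox.
export PATH="$FAKE_HOME/.local/bin:$PATH"
hello-demo
```

Because .bash.local prepends these folders, anything installed there also takes precedence over a system binary with the same name.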

Note on Mamba

At this point you might wonder why we have the Mamba installation at all, since we have seen that the system Python is used. In fact, our Mamba environment is totally decoupled from what we are using in our Jupyter notebook, and installing packages through mamba will not make them available in Jupyter. Instead, we should install Python packages through pip.

So what do we need Mamba for? I guess Jeremy set it up so that he can install binaries he wants to use from the terminal. For example, in the videos he talks about ctags, which he installs through mamba. Since installing non-Python binaries through pip can be complicated, we can use Mamba instead. In other words, we can use it as a general package manager, somewhat similar to apt-get.

Final words

In my opinion, Paperspace offers a great product at a very fair price, especially when combined with the setup described in this blog post!